Causal inference is a powerful tool in statistics and machine learning, allowing us to estimate the effects of interventions, not just associations, in complex systems. In this blog post, we will delve into the world of distribution-free causal inference, a methodology that offers flexibility and robustness when dealing with real-world data. By understanding the principles and techniques of distribution-free causal inference, we can better predict what would happen under an intervention and draw meaningful conclusions.

Understanding Distribution-Free Causal Inference

Distribution-free causal inference, also known as non-parametric causal inference, is an approach that does not rely on strong assumptions about the underlying data distribution. Unlike traditional methods that assume specific parametric models (for example, a linear outcome model with normally distributed errors), distribution-free inference estimates causal effects without imposing a particular functional form or distribution on the data. It still requires causal assumptions about how the data were generated, but not distributional ones.

This approach is particularly valuable when dealing with real-world datasets that may not follow a particular distribution, or when the relationship between variables is complex and non-linear. By freeing ourselves from restrictive assumptions, we can explore a wider range of causal relationships and reduce the risk that our conclusions are artifacts of a misspecified model.
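As a concrete illustration of inference that assumes nothing about the shape of the outcome distribution, consider a permutation (randomization) test, a standard distribution-free technique that this post does not otherwise cover. The sketch below assumes a randomized experiment; the function name `permutation_test` and the sample numbers are hypothetical. Under the null hypothesis that treatment has no effect on any unit, the treatment labels are interchangeable, so reshuffling them traces out the null distribution of the difference in means without any normality assumption.

```python
import random
import statistics

def permutation_test(treated, control, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference in means (hypothetical helper).

    If treatment has no effect on any unit, group labels are exchangeable, so
    reshuffling them generates the null distribution of the statistic.
    """
    rng = random.Random(seed)
    observed = statistics.fmean(treated) - statistics.fmean(control)
    pooled = list(treated) + list(control)
    n_t = len(treated)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # random relabelling of units
        diff = statistics.fmean(pooled[:n_t]) - statistics.fmean(pooled[n_t:])
        if abs(diff) >= abs(observed):
            extreme += 1
    # The add-one correction keeps the Monte Carlo p-value strictly positive.
    p_value = (extreme + 1) / (n_permutations + 1)
    return observed, p_value

# Hypothetical outcomes from a small randomized experiment.
treated = [12.1, 14.3, 13.8, 15.0, 12.9]
control = [10.2, 11.5, 9.8, 12.0, 10.9]
effect, p = permutation_test(treated, control)
print(f"difference in means = {effect:.2f}, p = {p:.4f}")
```

Because the p-value comes from the data's own reshuffles, the same code works for skewed or heavy-tailed outcomes. The cost is computation, and the Monte Carlo p-value varies slightly with the seed and the number of permutations.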

Key Principles of Distribution-Free Causal Inference

Distribution-free causal inference is built upon several fundamental principles that guide its methodology:

- Counterfactuals: Causal inference relies heavily on counterfactual reasoning. We aim to understand what would have happened to the same units under a different intervention or treatment than the one they actually received. Because counterfactual outcomes are never observed, they must be estimated; comparing observed outcomes with these estimated counterfactual outcomes lets us infer causal relationships.

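- Potential Outcomes: In causal inference, we consider potential outcomes for each unit (e.g., individual, group, or observation) under different treatment conditions. These potential outcomes represent the results that would have been observed if a specific treatment or intervention had been applied. For a binary treatment, each unit has two potential outcomes, often written Y(1) and Y(0); the unit's causal effect is Y(1) - Y(0), but only one of the two is ever observed.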

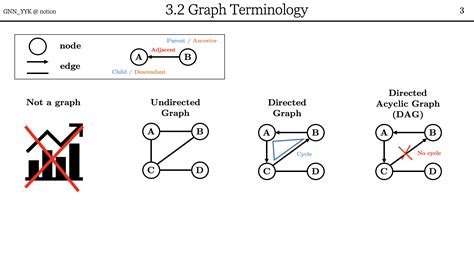

- Causal Graphs: Causal graphs, such as directed acyclic graphs (DAGs), provide a visual representation of the causal relationships between variables. They help us identify confounders, mediators, and colliders, which tells us which variables to adjust for and which to leave alone.

- Causal Identification: Causal identification is the process of determining whether a causal effect can be estimated from the available data. It involves assessing the assumptions and conditions necessary for causal inference, such as the absence of unmeasured confounders and sufficient overlap between treated and untreated units, or, alternatively, the presence of a valid instrumental variable.
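The principles above can be tied together in a small simulation. This is a minimal sketch under a hypothetical data-generating process of our own choosing: a binary confounder `Z` raises both the probability of treatment `T` and the outcome (the DAG Z → T, Z → Y, T → Y), and the true effect is fixed at +2 for every unit. Because the simulation keeps both potential outcomes, we can compare the true average treatment effect (ATE) with what an analyst who sees only one outcome per unit would estimate. Adjusting for `Z` is valid here only because `Z` is the sole confounder and every stratum contains both treated and untreated units.

```python
import random
import statistics

def simulate(n=20_000, seed=1):
    """Hypothetical units that carry both potential outcomes Y(0) and Y(1)."""
    rng = random.Random(seed)
    units = []
    for _ in range(n):
        z = rng.random() < 0.5                    # binary confounder
        y0 = 5.0 + 3.0 * z + rng.gauss(0, 1)      # outcome if untreated
        y1 = y0 + 2.0                             # outcome if treated
        t = rng.random() < (0.8 if z else 0.2)    # Z drives treatment uptake
        units.append((z, t, y0, y1))
    return units

units = simulate()

# The true ATE uses both potential outcomes, which only a simulation can see.
true_ate = statistics.fmean(y1 - y0 for _, _, y0, y1 in units)

# In real data only one potential outcome per unit is observed.
observed = [(z, t, y1 if t else y0) for z, t, y0, y1 in units]

def diff_in_means(rows):
    """Mean outcome of treated rows minus mean outcome of untreated rows."""
    return (statistics.fmean(y for _, t, y in rows if t)
            - statistics.fmean(y for _, t, y in rows if not t))

naive = diff_in_means(observed)

def adjusted_ate(rows):
    """Within-stratum differences in means, weighted by each stratum's share."""
    total = 0.0
    for level in (False, True):
        stratum = [r for r in rows if r[0] == level]
        total += diff_in_means(stratum) * len(stratum) / len(rows)
    return total

adjusted = adjusted_ate(observed)
print(f"true ATE {true_ate:.2f}, naive {naive:.2f}, adjusted {adjusted:.2f}")
```

The naive comparison mixes the effect of `T` with the effect of `Z`, while the stratified estimate lands close to 2. If `Z` were unmeasured, no amount of flexible modeling of the observed data would remove that bias, which is why identification comes before estimation.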

Techniques for Distribution-Free Causal Inference

Distribution-free causal inference offers a variety of techniques and methods to estimate causal effects. Here are some commonly used approaches:

Propensity Score Matching (PSM)

Propensity score matching is a popular technique used to reduce bias from observed confounders in observational studies. It involves estimating the propensity score, which represents the probability of receiving a particular treatment given the observed covariates. By matching treated and untreated units based on their propensity scores, we can create groups that are comparable on those covariates. Matching cannot, however, remove bias from confounders that were never measured.

| Treatment Group | Control Group |
|---|---|
| Treated units with similar propensity scores | Untreated units with similar propensity scores |
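Below is a minimal sketch of propensity score matching, assuming two binary covariates so that the propensity score can be estimated non-parametrically as the share of treated units within each covariate cell (logistic regression is a common alternative). The data-generating process, the true effect of 1.5, and all function names are hypothetical. Each treated unit is matched, with replacement, to the control units at the nearest propensity score, with ties averaged; the result estimates the average treatment effect on the treated (ATT).

```python
import random
import statistics
from collections import defaultdict

def simulate(n=2_000, seed=2):
    """Hypothetical data: two binary covariates; true treatment effect 1.5."""
    rng = random.Random(seed)
    units = []
    for _ in range(n):
        x = (rng.random() < 0.5, rng.random() < 0.3)
        p_treat = 0.2 + 0.4 * x[0] + 0.2 * x[1]   # depends on covariates only
        t = rng.random() < p_treat
        y = 10.0 + 2.0 * x[0] - 1.0 * x[1] + 1.5 * t + rng.gauss(0, 1)
        units.append((x, t, y))
    return units

def estimate_propensity(units):
    """P(T=1 | X) estimated as the treated share in each covariate cell."""
    counts = defaultdict(lambda: [0, 0])  # cell -> [n_treated, n_total]
    for x, t, _ in units:
        counts[x][0] += t
        counts[x][1] += 1
    return {x: n_t / n for x, (n_t, n) in counts.items()}

def psm_att(units):
    """Nearest-neighbour propensity score matching with replacement (ATT)."""
    e = estimate_propensity(units)
    # Group control outcomes by propensity score; equal scores are ties.
    by_score = defaultdict(list)
    for x, t, y in units:
        if not t:
            by_score[e[x]].append(y)
    control_mean = {s: statistics.fmean(ys) for s, ys in by_score.items()}
    effects = []
    for x, t, y in units:
        if t:
            nearest = min(control_mean, key=lambda s: abs(s - e[x]))
            effects.append(y - control_mean[nearest])
    return statistics.fmean(effects)

units = simulate()
att = psm_att(units)
print(f"estimated ATT: {att:.2f}")
```

With continuous covariates the cell-frequency estimator breaks down, and a model or smoother for the propensity score is needed; matching then also requires a search over many distinct scores rather than a handful of cells.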

Instrumental Variables (IV)

Instrumental variables are used when the treatment assignment is not random and may be influenced by unobserved confounders. An instrumental variable is a variable that is associated with the treatment, affects the outcome only through the treatment (the exclusion restriction), and shares no unobserved confounders with the outcome. By using such a variable, we can estimate the causal effect of the treatment on the outcome even when the confounders cannot be measured or controlled for directly.
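A minimal sketch of the simplest instrumental-variable estimator, the Wald ratio for a binary instrument, assuming a hypothetical encouragement design: `Z` is randomly assigned, an unobserved `U` confounds treatment `D` and outcome `Y`, and `Z` affects `Y` only through `D`. The ratio divides the instrument's effect on the outcome by its effect on treatment uptake. With a constant treatment effect, as simulated here, it targets that effect; when effects vary across units, it is usually interpreted as the local average treatment effect for compliers, which additionally requires monotonicity (the instrument pushes no unit away from treatment). All names and numbers are illustrative.

```python
import random
import statistics

def simulate(n=50_000, seed=3):
    """Hypothetical encouragement design; the true effect of D on Y is 2.0."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        z = rng.random() < 0.5                            # randomized instrument
        u = rng.gauss(0, 1)                               # unobserved confounder
        d = (1.0 * z + 0.5 * u + rng.gauss(0, 1)) > 0.5   # treatment taken
        y = 1.0 + 2.0 * d + 1.5 * u + rng.gauss(0, 1)     # no direct Z term
        rows.append((z, d, y))
    return rows

def wald_estimate(rows):
    """Effect of Z on Y divided by effect of Z on D."""
    def diff_by_z(index):
        on = statistics.fmean(r[index] for r in rows if r[0])
        off = statistics.fmean(r[index] for r in rows if not r[0])
        return on - off
    reduced_form = diff_by_z(2)   # Z -> Y
    first_stage = diff_by_z(1)    # Z -> D (change in share treated)
    return reduced_form / first_stage

def naive_difference(rows):
    """Treated-minus-untreated mean outcome, ignoring the confounder."""
    return (statistics.fmean(y for _, d, y in rows if d)
            - statistics.fmean(y for _, d, y in rows if not d))

rows = simulate()
print(f"naive: {naive_difference(rows):.2f}, Wald IV: {wald_estimate(rows):.2f}")
```

The naive comparison absorbs the effect of `U` and overstates the treatment effect, whereas the Wald ratio never needs `U`. A weak first stage (a denominator close to zero) makes the ratio very noisy.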

Difference-in-Differences (DiD)

Difference-in-Differences is a technique used to estimate the causal effect of a treatment or intervention by comparing how outcomes change over time in a treatment group and in a control group. It assumes that the two groups would have followed parallel trends in the absence of the treatment. Subtracting the control group's before-after change from the treatment group's before-after change yields the estimated causal effect.
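The 2x2 version of this calculation fits in a few lines. This sketch assumes individual records tagged with a group and a period; the record layout, function names, and numbers are hypothetical.

```python
from collections import defaultdict

def group_means(records):
    """Average outcome for each (group, period) pair."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for group, period, y in records:
        sums[(group, period)] += y
        counts[(group, period)] += 1
    return {key: sums[key] / counts[key] for key in sums}

def did_estimate(records):
    """Classic 2x2 difference-in-differences estimate."""
    m = group_means(records)
    treated_change = m[("treated", "post")] - m[("treated", "pre")]
    control_change = m[("control", "post")] - m[("control", "pre")]
    # Under parallel trends, the control group's change stands in for the
    # change the treated group would have experienced without treatment.
    return treated_change - control_change

# Hypothetical records: (group, period, outcome).
records = [
    ("treated", "pre", 19.0), ("treated", "pre", 21.0),
    ("treated", "post", 26.0), ("treated", "post", 28.0),
    ("control", "pre", 17.0), ("control", "pre", 19.0),
    ("control", "post", 20.0), ("control", "post", 22.0),
]
print(did_estimate(records))  # 4.0
```

Here the treated group improved by 7 and the control group by 3, so 4 units of the treated group's improvement are attributed to the treatment. In practice the same number is usually obtained from a regression with group, period, and group-by-period interaction terms, which also yields standard errors.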

Regression Discontinuity Design (RDD)

Regression discontinuity design is a causal inference method for settings where treatment is assigned according to whether a running variable (such as a test score) falls above or below a threshold or cutoff point. It assumes that units just above and just below the threshold are otherwise similar, so that any jump in outcomes at the cutoff can be attributed to the treatment. The resulting estimate is the treatment effect at the threshold, which may not carry over to units far from it.
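A minimal sketch of a sharp regression discontinuity estimate, assuming every unit whose running variable is at or above the cutoff is treated. It fits a separate least-squares line on each side of the cutoff, using only observations within a chosen bandwidth, and reports the jump between the two lines at the cutoff (a local linear estimator). The simulated data, the bandwidth of 10, and the function names are hypothetical; in practice the bandwidth is usually chosen by a data-driven procedure.

```python
import random

def ols_fit(xs, ys):
    """Simple least-squares line; returns (intercept, slope)."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return my - slope * mx, slope

def rdd_estimate(running, outcome, cutoff, bandwidth):
    """Sharp RDD via local linear fits on each side of the cutoff."""
    # Centre the running variable so each intercept is the fit at the cutoff.
    left = [(r - cutoff, y) for r, y in zip(running, outcome)
            if cutoff - bandwidth <= r < cutoff]
    right = [(r - cutoff, y) for r, y in zip(running, outcome)
             if cutoff <= r <= cutoff + bandwidth]
    a_left, _ = ols_fit(*zip(*left))
    a_right, _ = ols_fit(*zip(*right))
    return a_right - a_left

# Hypothetical data: running score on [0, 100], cutoff at 50,
# true jump in the outcome of 5.0 at the cutoff.
rng = random.Random(4)
scores = [rng.uniform(0, 100) for _ in range(5_000)]
outcomes = [20.0 + 0.3 * s + 5.0 * (s >= 50) + rng.gauss(0, 2) for s in scores]
estimate = rdd_estimate(scores, outcomes, cutoff=50, bandwidth=10)
print(f"estimated jump at the cutoff: {estimate:.2f}")
```

Narrowing the bandwidth reduces reliance on the linear approximation but leaves fewer observations, so the estimate becomes noisier.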

Advantages of Distribution-Free Causal Inference

Distribution-free causal inference offers several advantages over traditional parametric approaches:

- Flexibility: Distribution-free methods are not restricted by specific distributional assumptions, making them more adaptable to various data types and complex relationships.

- Robustness: By avoiding strong assumptions, these methods are less sensitive to model misspecification and can handle non-linear and interactive effects.

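- Increased Generalizability: Because distribution-free methods do not depend on a particular parametric model, their conclusions do not hinge on a functional form that may hold in one setting but fail in another. This can make results easier to carry over to a wider range of settings, although external validity still depends on how similar those settings are.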

- Visual Interpretability: Causal graphs provide a visual framework, and counterfactual reasoning an intuitive one, for understanding causal relationships, making the results easier to interpret and communicate.

Challenges and Considerations

While distribution-free causal inference offers many benefits, there are also challenges and considerations to keep in mind:

- Data Requirements: Distribution-free methods often require larger sample sizes compared to parametric approaches to achieve reliable estimates. Additionally, the data should be sufficiently rich to capture the complex relationships between variables.

- Causal Assumptions: Distribution-free inference still relies on certain causal assumptions, such as the absence of unmeasured confounders or, for instrumental-variable methods, the validity of the instrument. Violation of these assumptions can lead to biased estimates.

- Model Selection: Choosing the appropriate causal inference technique depends on the specific research question and the nature of the data. It requires careful consideration of the assumptions and limitations of each method.

Applications of Distribution-Free Causal Inference

Distribution-free causal inference has found applications in various fields, including:

- Healthcare: Understanding the causal effects of medical treatments, interventions, and policies on patient outcomes.

- Economics: Estimating the impact of economic policies, market interventions, and business strategies on economic outcomes.

- Social Sciences: Analyzing the causal relationships between social factors, such as education, income, and health outcomes.

- Marketing: Evaluating the effectiveness of marketing campaigns, advertising strategies, and customer engagement initiatives.

- Environmental Science: Studying the causal effects of environmental interventions, such as conservation efforts or pollution control measures.

Conclusion

Distribution-free causal inference provides a powerful framework for uncovering causal relationships in complex systems. By embracing flexibility and robustness, we can estimate the effects of interventions more credibly and draw meaningful conclusions from real-world data. Whether in healthcare, economics, or the social sciences, distribution-free causal inference offers valuable insights into the underlying causes and effects, enabling us to make informed decisions and drive positive change.

FAQ

What is the main advantage of distribution-free causal inference over parametric approaches?


Distribution-free causal inference offers flexibility and robustness by not relying on strong assumptions about the data distribution. This allows a wider range of data types and complex relationships to be analyzed, and it reduces the risk that conclusions hinge on a misspecified model.

How does propensity score matching work in distribution-free causal inference?


Propensity score matching estimates the propensity score, which represents the probability of receiving a treatment given the observed covariates. By matching treated and untreated units based on their propensity scores, we create comparable groups for causal inference, reducing bias in observational studies.

What are the key assumptions in distribution-free causal inference?


Distribution-free causal inference relies on causal assumptions such as the absence of unmeasured confounders (or, when instrumental variables are used, the validity of the instrument), sufficient overlap between treated and untreated units, and a correctly specified causal graph. Violation of these assumptions can bias causal estimates.

Can distribution-free causal inference be applied to all types of data?


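Not without conditions. Because it avoids assumptions about the data distribution, it can be used with many kinds of data, and it is most useful when relationships are complex or non-linear. It still depends on the causal assumptions described above, and it may require larger sample sizes and richer data to capture these complexities accurately.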