Understanding Linear Regression

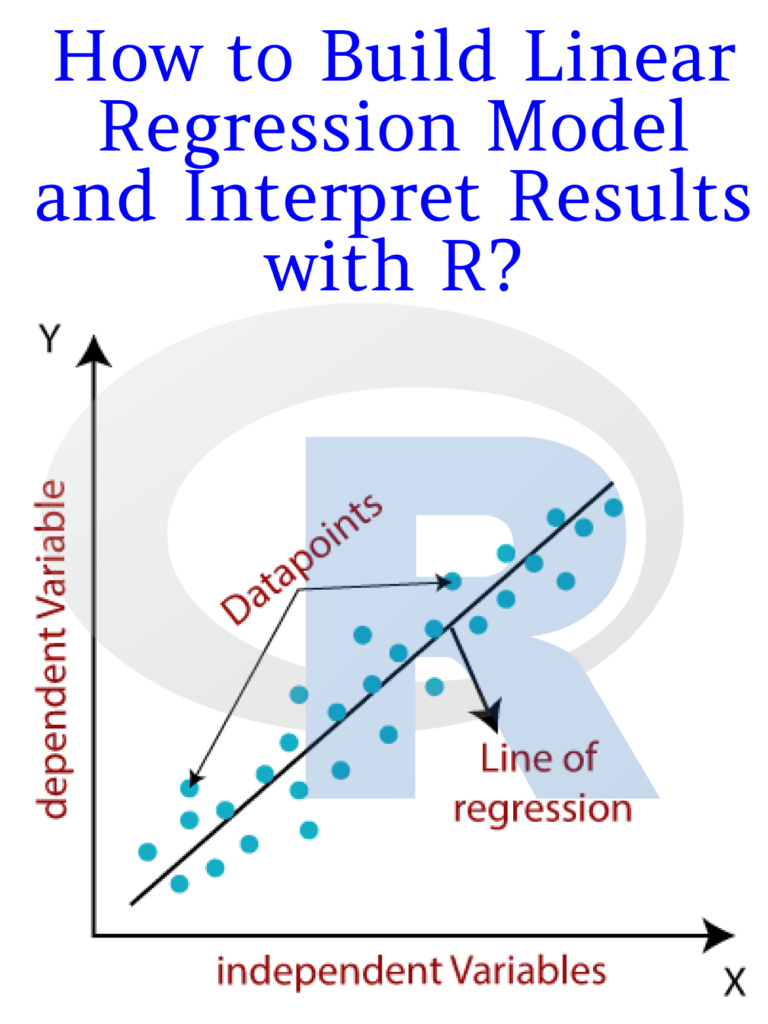

Linear regression is a fundamental statistical technique used to model the relationship between a response variable and one or more predictors. In its simplest form it relates two variables: the predictor variable (often denoted as ‘x’) and the response or dependent variable (denoted as ‘y’). This method is commonly employed to predict or estimate the value of the response variable from known values of the predictor variable. The relationship is represented by a straight line, hence the term “linear.”
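The straight line can be written as a formula. A standard way to state the simple linear regression model uses an intercept β₀, a slope β₁, and a random error term ε (symbols introduced here for illustration). The line is chosen by ordinary least squares, which is how R's lm() fits it, and the least-squares estimates have the closed form shown on the second line:

```latex
y_i = \beta_0 + \beta_1 x_i + \varepsilon_i, \qquad i = 1, \dots, n

\hat{\beta}_1 = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^{n} (x_i - \bar{x})^2},
\qquad
\hat{\beta}_0 = \bar{y} - \hat{\beta}_1 \bar{x}
```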

Implementation in R

In R, linear regression is available out of the box: the lm() function, part of the built-in stats package, is the primary tool for fitting linear models. Its basic form is lm(formula, data), where the formula specifies the relationship between the predictor and response variables, and the data argument names the data frame that contains those variables.

For instance, if we have a dataset named my_data containing columns ‘x’ and ‘y’, and we want to fit a linear regression model, we would use the following code:

```r
# Load the dataset
my_data <- read.csv("my_data.csv")

# Fit the linear regression model
model <- lm(y ~ x, data = my_data)
```

Here, y ~ x is the formula specifying that the response variable ‘y’ is modeled as a function of the predictor variable ‘x’.
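Because my_data.csv is only a placeholder, here is a self-contained sketch that simulates stand-in data instead. The true intercept 2, slope 3, and noise level are assumptions made purely for illustration. It fits the same model and checks the lm() estimates against the textbook least-squares formulas, slope = cov(x, y) / var(x) and intercept = mean(y) - slope * mean(x).

```r
# Hypothetical stand-in for my_data.csv: 100 points simulated from
# y = 2 + 3x + noise (these values are assumptions for illustration)
set.seed(42)
my_data <- data.frame(x = runif(100, min = 0, max = 10))
my_data$y <- 2 + 3 * my_data$x + rnorm(100, sd = 2)

# Fit the linear regression model, as in the text
model <- lm(y ~ x, data = my_data)

# Closed-form least-squares estimates for comparison:
# slope = cov(x, y) / var(x), intercept = mean(y) - slope * mean(x)
slope <- cov(my_data$x, my_data$y) / var(my_data$x)
intercept <- mean(my_data$y) - slope * mean(my_data$x)

print(coef(model))          # named vector: (Intercept), x
print(c(intercept, slope))  # should match the lm() estimates
```

The two printouts agree because lm() computes the same least-squares solution, just via a numerically stabler matrix decomposition.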

Interpreting the Results

Once the model is fitted, we can access various statistical measures to understand the relationship between the variables. These include:

Coefficients: The slope coefficients represent the estimated change in the response variable for a one-unit change in the corresponding predictor variable, holding all other predictor variables constant. A simple linear regression, with only one predictor variable, has two coefficients: the intercept (the predicted value of ‘y’ when ‘x’ is zero) and the slope (the coefficient of the predictor variable).

Residuals: Residuals are the differences between the observed values of the response variable and the values predicted by the model. They provide insight into how well the model fits. Ideally, residuals should be scattered randomly around zero with no systematic pattern, indicating that the model adequately captures the relationship between the variables.

R-squared: The R-squared value, often denoted as R^2, measures how well the model fits the data used to estimate it. It is the proportion of the variance in the response variable that is explained by the predictor variable(s), and for a model with an intercept it ranges from 0 to 1. A higher R-squared indicates a closer fit to the sample. However, R-squared alone says nothing about how accurate predictions on new data will be, and it never decreases when predictors are added, even useless ones; the adjusted R-squared reported by summary() corrects for the number of predictors.
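A minimal sketch of how these quantities can be pulled out of a fitted lm object, again on simulated stand-in data (the data-generating values are assumptions for illustration). It also recomputes R-squared from its definition, 1 - SS_res / SS_tot, to show what summary() reports.

```r
# Simulated stand-in data (hypothetical): true intercept 2, true slope 3
set.seed(1)
my_data <- data.frame(x = runif(50, 0, 10))
my_data$y <- 2 + 3 * my_data$x + rnorm(50, sd = 2)
model <- lm(y ~ x, data = my_data)

# Coefficients: intercept and slope estimates
coef(model)

# Residuals: observed y minus fitted y
res <- residuals(model)
head(res)
all.equal(unname(res), my_data$y - unname(fitted(model)))

# R-squared from summary() and from its definition 1 - SS_res / SS_tot
r2_summary <- summary(model)$r.squared
ss_res <- sum(res^2)
ss_tot <- sum((my_data$y - mean(my_data$y))^2)
r2_manual <- 1 - ss_res / ss_tot
c(summary = r2_summary, manual = r2_manual)

# Adjusted R-squared, which penalizes additional predictors
summary(model)$adj.r.squared
```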

Model Evaluation and Validation

Evaluating the performance of a linear regression model is crucial to ensure its reliability and generalizability. This can be done through various techniques, including:

Residual Analysis: Examining the distribution of residuals can reveal potential issues with the model. For instance, if the residuals are not normally distributed, or show patterns when plotted against the fitted values (such as a curve or a funnel shape), the model assumptions may be violated.

Cross-Validation: In its simplest (hold-out) form, this technique divides the dataset into a training set and a validation set. The model is fitted on the training set and then evaluated on the validation set to assess its performance on unseen data. k-fold cross-validation repeats this process k times, using each of k subsets once as the validation set, and averages the results. This estimates how well the model generalizes to new data points.

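Hypothesis Testing: Statistical tests can be used to assess the significance of the predictor variables in the model. The t-test on each coefficient checks whether that coefficient differs from zero, and the F-test checks whether the predictors, taken together, explain a significant share of the variance in the response. Both are reported by summary(). A small p-value indicates that the observed relationship would be unlikely if there were no true relationship, although it does not by itself show that the relationship is large or practically important.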
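The three evaluation techniques above can be sketched in base R as follows. The simulated data, the choice of the Shapiro-Wilk test for the normality check, and k = 5 folds are all assumptions for illustration; a residual plot is often more informative than a single normality test.

```r
# Simulated stand-in data (hypothetical)
set.seed(123)
n <- 200
my_data <- data.frame(x = runif(n, 0, 10))
my_data$y <- 2 + 3 * my_data$x + rnorm(n, sd = 2)
model <- lm(y ~ x, data = my_data)

# Residual analysis: Shapiro-Wilk test of normality on the residuals
# (a small p-value suggests the residuals are not normally distributed)
shapiro.test(residuals(model))

# Hypothesis testing: t-tests per coefficient and the overall F-test
s <- summary(model)
s$coefficients   # columns: Estimate, Std. Error, t value, Pr(>|t|)
s$fstatistic     # F value with numerator and denominator df

# Cross-validation: 5-fold estimate of out-of-sample RMSE
k <- 5
folds <- sample(rep(1:k, length.out = n))  # random fold assignment
fold_rmse <- numeric(k)
for (i in 1:k) {
  train_set <- my_data[folds != i, ]
  valid_set <- my_data[folds == i, ]
  fit <- lm(y ~ x, data = train_set)        # fit on the training folds
  pred <- predict(fit, newdata = valid_set) # predict the held-out fold
  fold_rmse[i] <- sqrt(mean((valid_set$y - pred)^2))
}
mean(fold_rmse)  # average validation error across folds
```

Averaging over folds uses every observation for validation exactly once, which makes the error estimate less dependent on a single lucky or unlucky split than a one-off hold-out set.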

Advanced Topics

Linear regression in R can be extended to handle more complex scenarios and incorporate additional features. Some advanced topics include:

Multiple Linear Regression: This extends simple linear regression to include multiple predictor variables. The formula for multiple linear regression would be y ~ x1 + x2 + ... + xn, where ‘y’ is the response variable and ‘x1’, ‘x2’, …, ‘xn’ are the predictor variables.

Polynomial Regression: When the relationship between the predictor and response variables is curved rather than linear, polynomial regression can be used. This involves adding higher-degree terms of the predictor variable(s), such as x², to capture non-linear patterns. Because the model is still linear in its coefficients, it can be fitted with lm(), for example with a formula such as y ~ x + I(x^2).

Regularization Techniques: Regularization methods, such as Ridge and Lasso regression, are used to reduce overfitting in models with many predictor variables. They add a penalty on the size of the coefficients to the least-squares cost function. Ridge (an L2 penalty) shrinks coefficients toward zero, while Lasso (an L1 penalty) can set some coefficients exactly to zero and therefore also performs variable selection. These methods are not part of lm(); in R they are commonly fitted with add-on packages such as glmnet.
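The three extensions above can be sketched with base R alone. The data and variable names (x1, x2, x3) are hypothetical. The ridge part is a minimal closed-form illustration, beta = (X'X + lambda * I)^-1 X'y on standardized predictors, not the glmnet implementation; Lasso has no closed form and is omitted here.

```r
set.seed(7)
n <- 100
d <- data.frame(x1 = rnorm(n), x2 = rnorm(n), x3 = rnorm(n))
# Hypothetical truth: y is linear in x1, x2 and quadratic in x3
d$y <- 1 + 2 * d$x1 - 1.5 * d$x2 + 0.8 * d$x3^2 + rnorm(n, sd = 0.5)

# Multiple linear regression: several predictors on the right-hand side
multi_fit <- lm(y ~ x1 + x2 + x3, data = d)

# Polynomial regression: add a squared term for x3.
# I() makes ^ mean arithmetic squaring rather than formula syntax.
poly_fit <- lm(y ~ x1 + x2 + x3 + I(x3^2), data = d)
anova(multi_fit, poly_fit)  # F-test: does the squared term help?

# Ridge regression sketch on standardized predictors.
# Centering y leaves the intercept unpenalized.
X <- scale(as.matrix(d[, c("x1", "x2", "x3")]))
y_c <- d$y - mean(d$y)
ridge <- function(lambda) {
  drop(solve(crossprod(X) + lambda * diag(ncol(X)), crossprod(X, y_c)))
}
ridge(0)   # lambda = 0: ordinary least-squares slopes on scaled predictors
ridge(50)  # larger lambda: coefficients shrunk toward zero (smaller L2 norm)
```

Standardizing first matters because the penalty treats all coefficients alike, so predictors measured on larger scales would otherwise be penalized differently.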

Visualizing Linear Regression

Visualizing the results of a linear regression model can provide valuable insights into the relationship between the variables. This can be done using scatter plots and line plots. For instance, we can visualize the relationship between ‘x’ and ‘y’ variables in the my_data dataset as follows:

```r
# Create a scatter plot of x vs. y
plot(my_data$x, my_data$y,
     xlab = "Predictor Variable (x)",
     ylab = "Response Variable (y)",
     main = "Scatter Plot of x vs. y")

# Add the regression line to the plot
abline(model, col = "red")
```
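A possible extension of the plot, sketched on simulated stand-in data (an assumption, since my_data.csv is a placeholder): draw the fitted line with a 95% confidence band from predict(..., interval = "confidence"), then plot residuals against fitted values to support the residual analysis described earlier.

```r
# Simulated stand-in data (hypothetical)
set.seed(42)
my_data <- data.frame(x = runif(100, 0, 10))
my_data$y <- 2 + 3 * my_data$x + rnorm(100, sd = 2)
model <- lm(y ~ x, data = my_data)

# Predictions with a 95% confidence interval over a grid of x values
grid <- data.frame(x = seq(min(my_data$x), max(my_data$x), length.out = 100))
band <- predict(model, newdata = grid, interval = "confidence", level = 0.95)

plot(my_data$x, my_data$y,
     xlab = "Predictor Variable (x)",
     ylab = "Response Variable (y)",
     main = "Fitted line with 95% confidence band")
lines(grid$x, band[, "fit"], col = "red")           # fitted line
lines(grid$x, band[, "lwr"], col = "red", lty = 2)  # lower bound
lines(grid$x, band[, "upr"], col = "red", lty = 2)  # upper bound

# Residuals vs fitted values: look for curvature or a funnel shape
plot(fitted(model), residuals(model),
     xlab = "Fitted values", ylab = "Residuals",
     main = "Residuals vs Fitted")
abline(h = 0, lty = 2)
```

Calling plot(model) on an lm object also produces R's standard set of diagnostic plots, including a residuals-vs-fitted panel.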

Conclusion

Linear regression is a powerful and widely used statistical technique for modeling the relationship between a response variable and one or more predictors. In R, the lm() function provides a simple and efficient way to conduct linear regression analysis. By checking the underlying assumptions, interpreting the results carefully, and employing appropriate model evaluation techniques, researchers and data analysts can use linear regression to make reliable predictions and gain valuable insights from their data.

FAQ

What is the primary function used for linear regression in R?

The primary function used for linear regression in R is lm(). It takes a formula and a dataset as arguments and returns a fitted linear regression model.

How can I interpret the coefficients of a linear regression model in R?

The coefficients of a linear regression model represent the estimated change in the response variable for a one-unit change in the corresponding predictor variable, holding all other predictor variables constant. The intercept term represents the predicted value of the response variable when all predictor variables are zero.

What is the purpose of residual analysis in linear regression?

Residual analysis is used to assess the goodness of fit of a linear regression model and to check its assumptions. By examining the distribution of residuals, we can identify potential issues such as non-linearity, non-constant variance of the errors, or non-normal errors.

How can I perform multiple linear regression in R?

Multiple linear regression can be performed in R by including multiple predictor variables in the formula of the lm() function. For example, y ~ x1 + x2 + x3 represents a multiple linear regression model with three predictor variables.

What are some common assumptions of linear regression models in R?

Common assumptions of linear regression models include linearity, independence of errors, constant variance of errors, and normality of errors. It’s important to check these assumptions before interpreting the results of a linear regression model.