Pointwise mutual information (PMI) is a statistical measure from information theory, widely used in natural language processing (NLP), that quantifies the association between two specific events, such as the occurrence of two words. It indicates how strongly particular words or phrases are related in a given corpus. PMI is useful in NLP tasks such as text classification, sentiment analysis, and information retrieval because it helps capture the semantic and contextual relationships between linguistic elements.

Understanding Pointwise Mutual Information

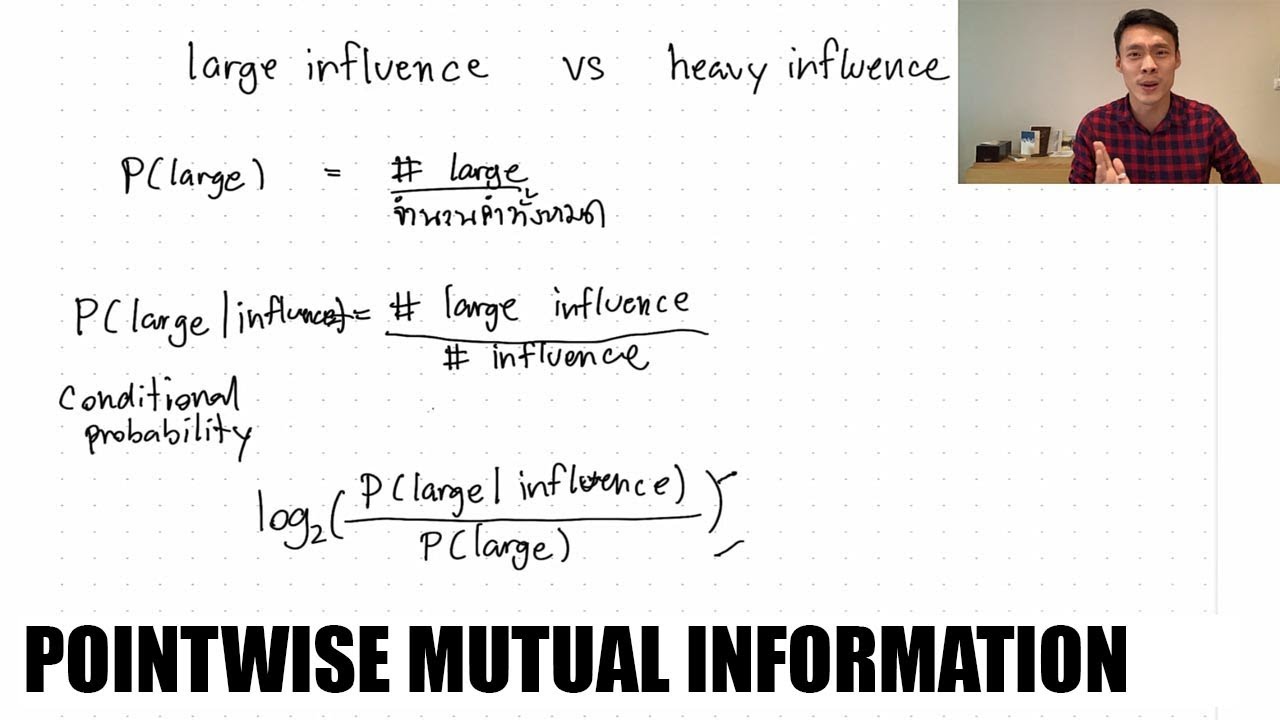

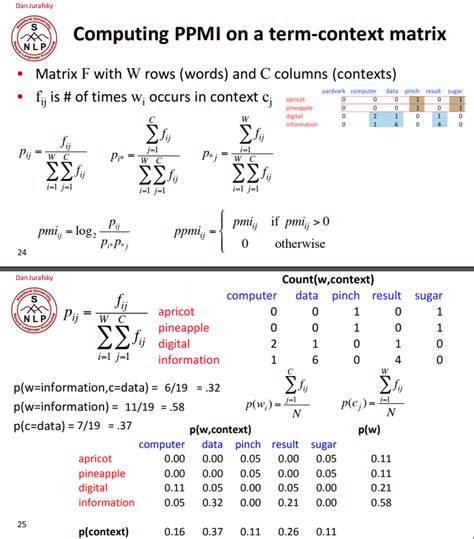

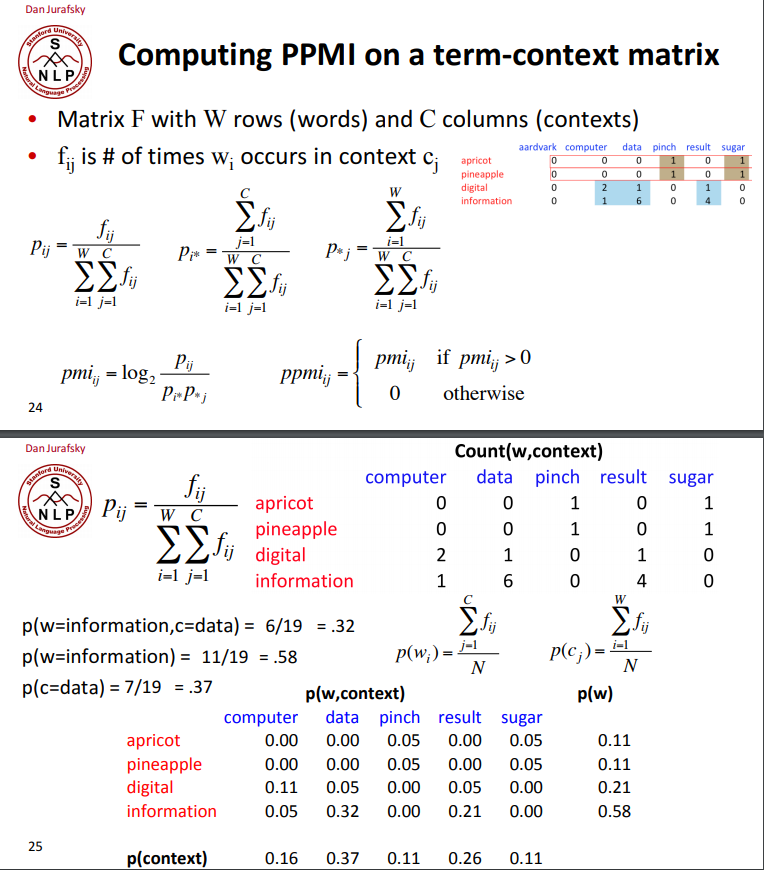

PMI is the logarithm of the ratio between the probability of two events occurring together (their joint probability) and the product of their individual probabilities. In NLP, the two events are usually the occurrences of two words, and the joint event is the two words appearing together, for example as adjacent tokens or within the same window of text. Because the product of the individual probabilities is the co-occurrence probability we would expect if the words were independent, PMI measures how far the observed co-occurrence deviates from chance.

The formula for calculating PMI is as follows:

$$
\mathrm{PMI}(w_1, w_2) = \log_2 \frac{P(w_1, w_2)}{P(w_1)\,P(w_2)}
$$

Where:

- PMI(w1, w2) represents the pointwise mutual information between words w1 and w2.

- P(w1, w2) is the joint probability of w1 and w2 occurring together.

- P(w1) and P(w2) are the individual (marginal) probabilities of w1 and w2, each estimated on its own, regardless of the other word.
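As a minimal sketch of the formula, the Python function below estimates each probability as a relative frequency over a shared total, for example the number of text windows in a corpus. The function name `pmi_from_counts` and its parameters are illustrative, not taken from any library, and the counts in the usage line are hypothetical.

```python
import math


def pmi_from_counts(pair_count: int, count_w1: int, count_w2: int, total: int) -> float:
    """Return PMI(w1, w2) in bits (log base 2).

    Every probability is estimated as count / total, so all counts must be
    positive and measured against the same total (e.g. number of windows).
    """
    p_joint = pair_count / total  # P(w1, w2)
    p_w1 = count_w1 / total       # P(w1)
    p_w2 = count_w2 / total       # P(w2)
    return math.log2(p_joint / (p_w1 * p_w2))


# Hypothetical counts: 1,000 windows; w1 in 100, w2 in 20, both together in 16.
print(pmi_from_counts(16, 100, 20, 1_000))
```

Independence would predict the pair in 100 × 20 / 1,000 = 2 windows. It is observed in 16, eight times more often, so the result is very close to log2(8) = 3 bits.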

Interpreting PMI Values

PMI values can be interpreted as follows:

- Positive PMI: The words co-occur more often than independence would predict, indicating a positive association. The higher the PMI value, the stronger the association.

- Negative PMI: The words co-occur less often than independence would predict, indicating a negative (inverse) relationship. Such words tend to avoid each other.

- Zero PMI: The joint probability equals the product of the individual probabilities, so the words are statistically independent. Seeing one word tells you nothing about whether the other will appear.
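The three cases can be made concrete with hypothetical counts. Suppose a corpus contains N = 10,000 windows, w1 occurs in 1,000 of them and w2 in 500. Independence then predicts the pair in 10,000 × 0.1 × 0.05 = 50 windows, and the sign of PMI depends only on whether the observed count is above, at, or below that figure:

$$
\begin{aligned}
P(w_1)\,P(w_2) &= 0.1 \times 0.05 = 0.005 \\
\text{together in 200 windows:}\quad \log_2 \frac{0.02}{0.005} &= \log_2 4 = 2 && \text{(positive)} \\
\text{together in 50 windows:}\quad \log_2 \frac{0.005}{0.005} &= \log_2 1 = 0 && \text{(independent)} \\
\text{together in 25 windows:}\quad \log_2 \frac{0.0025}{0.005} &= \log_2 0.5 = -1 && \text{(negative)}
\end{aligned}
$$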

It's important to note that PMI values are sensitive to the context in which they are calculated. Different corpora or text collections may yield different PMI values for the same word pairs. Therefore, it is crucial to consider the specific domain or context when interpreting PMI results.

Applications of PMI in NLP

Text Classification

PMI is widely used in text classification to identify words or word combinations that distinguish different classes of text. By calculating PMI between each candidate word (or word pair) and each class label, it is possible to keep only the most informative features and build more effective classification models.
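A minimal sketch of PMI-based feature selection, assuming PMI is measured between a term and a class label and that all probabilities are estimated from document counts. The function names `term_class_pmi` and `top_terms` and the four labeled documents are hypothetical.

```python
import math
from collections import Counter


def term_class_pmi(docs: list[tuple[set[str], str]]) -> dict[tuple[str, str], float]:
    """Return PMI(term, label) for every observed (term, label) pair.

    P(term) is the fraction of documents containing the term, P(label) the
    fraction with that label, and P(term, label) the fraction with both.
    """
    n_docs = len(docs)
    term_df: Counter = Counter()   # documents containing each term
    label_df: Counter = Counter()  # documents carrying each label
    joint_df: Counter = Counter()  # documents with the term AND the label
    for terms, label in docs:
        label_df[label] += 1
        for term in terms:
            term_df[term] += 1
            joint_df[(term, label)] += 1
    return {
        (term, label): math.log2(
            (count / n_docs) / ((term_df[term] / n_docs) * (label_df[label] / n_docs))
        )
        for (term, label), count in joint_df.items()
    }


def top_terms(scores: dict[tuple[str, str], float], label: str, k: int = 3) -> list[str]:
    """Pick the k terms most strongly associated with `label` as features."""
    ranked = [(term, s) for (term, lab), s in scores.items() if lab == label]
    ranked.sort(key=lambda item: item[1], reverse=True)
    return [term for term, _ in ranked[:k]]


# Hypothetical toy corpus: (set of terms, class label)
docs = [
    ({"goal", "match", "team"}, "sports"),
    ({"goal", "league"}, "sports"),
    ({"election", "vote", "team"}, "politics"),
    ({"vote", "senate"}, "politics"),
]
scores = term_class_pmi(docs)
print(top_terms(scores, "sports"))
print(top_terms(scores, "politics"))
```

Here "team" appears once in each class, so its PMI with either label is 0 and it is not selected, while class-specific words such as "goal" and "vote" are.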

Sentiment Analysis

In sentiment analysis, PMI helps capture the sentiment-laden relationships between words. By analyzing the PMI values of word pairs, it is possible to identify words that are strongly associated with positive or negative sentiments. This information can be utilized to develop sentiment analysis models that accurately classify text based on sentiment.
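One well-known way to do this, in the spirit of Turney's semantic orientation, scores a word by its PMI with a positive seed word minus its PMI with a negative seed word. The sketch below assumes sentence-level co-occurrence, the seeds "excellent" and "poor", and a small pseudo-count `k` so that pairs never seen together do not produce log(0); the helper names and review snippets are hypothetical.

```python
import math
from collections import Counter
from itertools import combinations


def count_sentences(sentences: list[list[str]]) -> tuple[int, Counter, Counter]:
    """Count, per sentence, which words occur and which word pairs co-occur."""
    word_counts: Counter = Counter()
    pair_counts: Counter = Counter()
    for tokens in sentences:
        unique = sorted(set(tokens))
        word_counts.update(unique)
        pair_counts.update(combinations(unique, 2))  # each pair stored in sorted order
    return len(sentences), word_counts, pair_counts


def pmi(w1: str, w2: str, n: int, word_counts: Counter, pair_counts: Counter,
        k: float = 0.5) -> float:
    """Sentence-level PMI; k is a pseudo-count so unseen pairs stay finite."""
    p_joint = (pair_counts[tuple(sorted((w1, w2)))] + k) / n
    return math.log2(p_joint / ((word_counts[w1] / n) * (word_counts[w2] / n)))


def semantic_orientation(word: str, stats: tuple[int, Counter, Counter],
                         pos_seed: str = "excellent", neg_seed: str = "poor") -> float:
    """Positive result: closer to the positive seed; negative: closer to the negative seed."""
    return pmi(word, pos_seed, *stats) - pmi(word, neg_seed, *stats)


# Hypothetical review snippets, tokenized by whitespace
sentences = [s.split() for s in [
    "the food was excellent and fresh",
    "excellent service and fresh bread",
    "poor service and stale bread",
    "the soup was stale and poor",
]]
stats = count_sentences(sentences)
for word in ("fresh", "stale", "service"):
    print(word, round(semantic_orientation(word, stats), 3))
```

"fresh" only co-occurs with "excellent" and gets a positive score, "stale" only with "poor" and gets a negative one, and "service", which appears once with each seed, scores exactly 0.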

Information Retrieval

PMI is employed in information retrieval systems to improve query expansion and document ranking. By considering the PMI values of query terms and document terms, it is possible to identify relevant documents that contain informative word combinations. This enhances the accuracy and relevance of search results.
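For query expansion, a simple sketch is to rank candidate terms by their document-level PMI with a query term and append the best ones to the query. The function `expansion_terms`, the `min_joint` cut-off, and the toy documents are all assumptions for illustration; a real system would also feed the expanded query into its ranking function, which is not shown here.

```python
import math
from collections import Counter


def expansion_terms(query_term: str, docs: list[set[str]], k: int = 2,
                    min_joint: int = 2) -> list[str]:
    """Rank terms by document-level PMI with `query_term` and return the top k.

    Terms that co-occur with the query term in fewer than `min_joint`
    documents are skipped, because PMI over-rewards very rare co-occurrences.
    """
    n_docs = len(docs)
    df = Counter(term for doc in docs for term in doc)  # document frequency
    joint = Counter(term for doc in docs if query_term in doc
                    for term in doc if term != query_term)
    p_query = df[query_term] / n_docs
    scored = []
    for term, count in joint.items():
        if count < min_joint:
            continue
        p_term = df[term] / n_docs
        scored.append((math.log2((count / n_docs) / (p_query * p_term)), term))
    scored.sort(reverse=True)
    return [term for _, term in scored[:k]]


# Hypothetical document collection, each document reduced to its set of terms
docs = [
    {"jaguar", "car", "engine", "speed"},
    {"jaguar", "car", "dealer"},
    {"jaguar", "engine", "car", "price"},
    {"car", "price", "insurance"},
    {"weather", "rain", "forecast"},
    {"price", "market", "forecast"},
]
print(["jaguar"] + expansion_terms("jaguar", docs))  # the expanded query
```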

Advantages of PMI

- Contextual Understanding: PMI captures the contextual relationships between words, allowing for a more nuanced understanding of their associations.

- Feature Selection: PMI is effective in selecting relevant features for various NLP tasks, reducing the dimensionality of the feature space.

- Simplicity: PMI is computed directly from co-occurrence counts and requires no model training, which makes it practical for large and diverse text collections.

Limitations of PMI

- Data Sparsity: PMI may struggle with data sparsity, especially when dealing with rare word pairs. In such cases, the estimated probabilities may be unreliable, leading to inaccurate PMI values.

- Context Dependency: PMI values are highly dependent on the context in which they are calculated. Changing the context or corpus can significantly impact the PMI values, requiring careful consideration of the specific application.
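The sparsity problem is easy to reproduce. The sketch below, using hypothetical counts, compares a well-attested pair with two words that each occur exactly once and happen to share a window; the helper `pmi` is illustrative.

```python
import math


def pmi(pair_count: int, count_w1: int, count_w2: int, total: int) -> float:
    """PMI in bits from raw counts, all relative to the same total."""
    return math.log2((pair_count / total) / ((count_w1 / total) * (count_w2 / total)))


total = 100_000  # hypothetical number of co-occurrence windows

# A frequent, genuinely associated pair: each word in 1,000 windows, together in 500.
frequent = pmi(500, 1_000, 1_000, total)

# Two words seen exactly once each, in the same window (a one-off name or a typo).
rare = pmi(1, 1, 1, total)

print(f"frequent pair: {frequent:.2f} bits, rare pair: {rare:.2f} bits")
```

A single chance co-occurrence reaches log2(100,000) ≈ 16.6 bits, far above the ≈ 5.6 bits of the well-attested pair, because the estimate does not reflect how little evidence it rests on.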

Alternative Measures: TF-IDF

While PMI is a valuable measure for capturing word associations, other measures such as Term Frequency-Inverse Document Frequency (TF-IDF) are also commonly used in NLP. TF-IDF combines how often a word appears in a document with how rare the word is across the entire corpus. Unlike PMI, which scores the association between two words, TF-IDF scores how important a single word is to a particular document.
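For comparison, here is a minimal TF-IDF sketch. It uses one common variant (term frequency as count divided by document length, idf as log(N / df)); libraries frequently add smoothing or normalization, so their exact numbers will differ. The function name and the three documents are hypothetical.

```python
import math
from collections import Counter


def tf_idf(docs: list[list[str]]) -> list[dict[str, float]]:
    """Score each term in each document with tf * idf.

    tf  = term count / document length
    idf = log(N / df), where df is the number of documents containing the term
    """
    n_docs = len(docs)
    df = Counter(term for doc in docs for term in set(doc))
    scores = []
    for doc in docs:
        counts = Counter(doc)
        scores.append({
            term: (count / len(doc)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return scores


docs = [
    "the cat sat on the mat".split(),
    "the dog sat on the log".split(),
    "the cat chased the dog".split(),
]
for i, doc_scores in enumerate(tf_idf(docs)):
    best = max(doc_scores, key=doc_scores.get)
    print(i, best, round(doc_scores[best], 3))
```

"the" appears in every document, so its idf and therefore its score is 0, while words unique to one document ("mat", "log", "chased") score highest there.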

Implementing PMI in Python

Implementing PMI in Python involves the following steps:

- Tokenization: Break the text into individual words or tokens.

- Create a co-occurrence matrix: Count how often word pairs occur together in the text.

- Calculate probabilities: Estimate the joint and individual probabilities of word pairs.

- Compute PMI: Use the PMI formula to calculate the PMI values for each word pair.

Here's a simple example using the nltk library in Python to calculate PMI for a given text:

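```python
import math

import nltk
from nltk import FreqDist

# Sample text
text = "The quick brown fox jumps over the lazy dog"

# Tokenize the text. Lowercasing and splitting on whitespace avoids extra
# downloads; nltk.word_tokenize(text) also works once NLTK's tokenizer data
# has been fetched with nltk.download().
tokens = text.lower().split()

# Frequency distributions of single words and of adjacent word pairs (bigrams)
word_freq = FreqDist(tokens)
pair_freq = FreqDist(nltk.bigrams(tokens))

n_words = len(tokens)      # number of tokens
n_pairs = len(tokens) - 1  # number of adjacent-pair positions

# Calculate PMI for each observed word pair
for (word1, word2), freq in pair_freq.items():
    joint_prob = freq / n_pairs
    word1_prob = word_freq[word1] / n_words
    word2_prob = word_freq[word2] / n_words
    pmi = math.log2(joint_prob / (word1_prob * word2_prob))
    print(f"PMI({word1}, {word2}) = {pmi:.3f}")
```

This code snippet calculates PMI for every adjacent word pair in the text. NLTK's FreqDist counts the individual words and the bigrams, the counts are converted into relative frequencies, and the PMI formula is applied directly with math.log2. NLTK also offers ready-made association measures, including PMI, through the BigramAssocMeasures class used with BigramCollocationFinder.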
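The steps above mention a co-occurrence matrix, whereas the snippet counts only adjacent pairs. As a sketch of the window-based variant, assuming a symmetric window of two tokens on each side and marginal probabilities taken from the matrix's row and column sums (a common choice, not the only one), the counting and scoring can be written with the standard library alone. The helper names are hypothetical.

```python
import math
from collections import Counter


def cooccurrence_counts(tokens: list[str], window: int = 2) -> Counter:
    """Count (word, context) pairs within `window` positions on either side."""
    counts: Counter = Counter()
    for i, word in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                counts[(word, tokens[j])] += 1
    return counts


def pmi_matrix(counts: Counter) -> dict[tuple[str, str], float]:
    """PMI for every observed (word, context) cell, marginals from row/column sums."""
    total = sum(counts.values())
    word_totals: Counter = Counter()
    context_totals: Counter = Counter()
    for (w, c), n in counts.items():
        word_totals[w] += n
        context_totals[c] += n
    return {
        (w, c): math.log2((n / total) / ((word_totals[w] / total) * (context_totals[c] / total)))
        for (w, c), n in counts.items()
    }


tokens = "the quick brown fox jumps over the lazy dog".split()
matrix = pmi_matrix(cooccurrence_counts(tokens, window=2))
for (w, c), score in sorted(matrix.items(), key=lambda item: item[1], reverse=True)[:5]:
    print(f"PMI({w}, {c}) = {score:.3f}")
```

Because the window is symmetric, the matrix is symmetric too, so PMI(w, c) equals PMI(c, w); pairs more than two tokens apart never receive a cell.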

Tips for Effective PMI Calculation

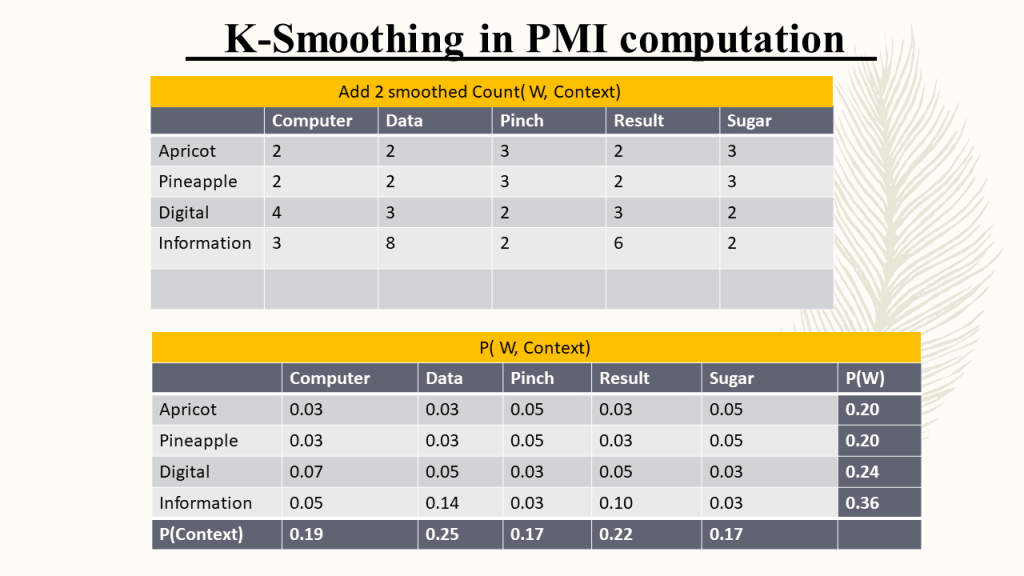

- Smoothing: Apply smoothing techniques, such as adding a small pseudo-count to the counts, to temper the extreme scores of rare pairs and to avoid taking the logarithm of zero for pairs that never co-occur.

- Contextualization: Consider the specific domain or context of the text to ensure accurate PMI calculations.

- Thresholding: Set a threshold for PMI values to filter out weak associations and focus on meaningful word pairs.
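The smoothing and thresholding tips can be combined in a short sketch. It assumes a square word-by-context count matrix, adds a pseudo-count `k` to every cell (simple add-k smoothing), clips negative values to 0 (the common positive PMI, or PPMI, variant), and then keeps only pairs above a threshold. The function names, `k`, and the counts are hypothetical.

```python
import math
from collections import Counter


def smoothed_ppmi(counts: Counter, vocab: set[str], k: float = 1.0) -> dict[tuple[str, str], float]:
    """Add-k smoothed positive PMI over a |V| x |V| word-by-context matrix.

    Every cell gets pseudo-count k (k must be > 0), so unseen pairs get a
    finite score and rare words get larger, less extreme marginals.
    """
    v = len(vocab)
    total = sum(counts.values()) + k * v * v
    row: Counter = Counter()
    col: Counter = Counter()
    for (w, c), n in counts.items():
        row[w] += n
        col[c] += n
    scores = {}
    for w in vocab:
        for c in vocab:
            p_joint = (counts[(w, c)] + k) / total  # Counter returns 0 for unseen cells
            p_w = (row[w] + k * v) / total
            p_c = (col[c] + k * v) / total
            scores[(w, c)] = max(0.0, math.log2(p_joint / (p_w * p_c)))  # clip to PPMI
    return scores


def keep_strong(scores: dict[tuple[str, str], float], threshold: float) -> dict[tuple[str, str], float]:
    """Thresholding: keep only pairs whose score exceeds `threshold`."""
    return {pair: s for pair, s in scores.items() if s > threshold}


# Hypothetical symmetric counts
counts = Counter({("new", "york"): 20, ("york", "new"): 20,
                  ("new", "car"): 1, ("car", "new"): 1,
                  ("rare", "typo"): 1, ("typo", "rare"): 1})
vocab = {w for pair in counts for w in pair}
scores = smoothed_ppmi(counts, vocab, k=1.0)
print(keep_strong(scores, threshold=1.0))
```

On these counts, the unsmoothed PMI of the one-off pair ("rare", "typo") would be log2(44) ≈ 5.46 bits; with k = 1 it drops to about 1.94 bits, and the never-seen pair ("york", "car") is clipped to 0 instead of raising a math error.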

Pointwise Mutual Information is a powerful tool in NLP, providing insights into the relationships between words in a given context. By understanding and applying PMI effectively, researchers and practitioners can enhance various NLP tasks, leading to more accurate and contextually aware models.

Conclusion

In this blog post, we explored the concept of Pointwise Mutual Information and its significance in natural language processing. We discussed how PMI calculates the association between words, its interpretation, and its applications in text classification, sentiment analysis, and information retrieval. By leveraging PMI, NLP models can capture the semantic and contextual relationships between words, improving their performance and accuracy. As NLP continues to advance, PMI remains a valuable measure for understanding the nuances of language and enhancing various NLP applications.

What is the main purpose of using Pointwise Mutual Information in NLP tasks?


Pointwise Mutual Information is used to quantify the association between words in a given context. It helps identify strong and weak relationships between words, aiding in various NLP tasks such as text classification, sentiment analysis, and information retrieval.

How is PMI calculated, and what does it represent?


PMI is calculated using the formula: PMI(w1, w2) = log2 (P(w1, w2) / (P(w1) * P(w2))). It represents the logarithmic ratio of the joint probability of two words occurring together to the product of their individual probabilities. Positive PMI values indicate a positive association, negative values indicate a negative association, and zero PMI suggests no association.

What are some common applications of PMI in NLP?


PMI is widely used in text classification to identify relevant features, in sentiment analysis to capture sentiment-laden word relationships, and in information retrieval to improve query expansion and document ranking.

What are the advantages of using PMI in NLP tasks?


PMI provides a more nuanced understanding of word associations by capturing contextual relationships. It is effective in feature selection, reducing the dimensionality of the feature space. Additionally, it is simple to compute directly from co-occurrence counts and requires no model training.

What are some limitations of using PMI in NLP tasks?


PMI may struggle with data sparsity, especially when dealing with rare word pairs. It is also highly dependent on the context in which it is calculated, requiring careful consideration of the specific application and corpus.